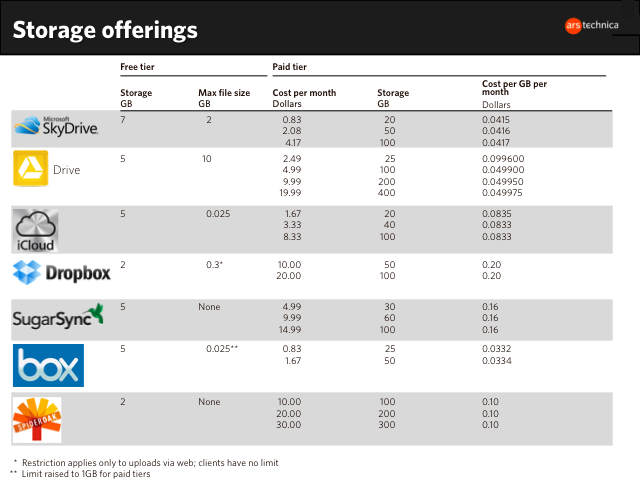

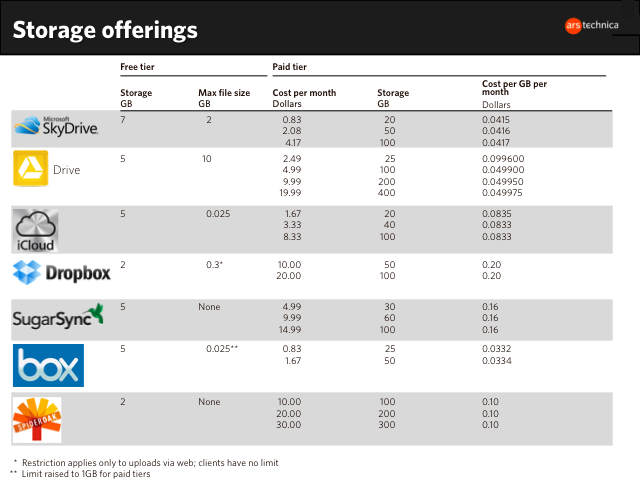

Cloud storage services are cropping up left and right, all enticing their customers with a few gigabytes of storage that sync seemingly anywhere, with any device. We’ve collected some details on the most popular services, including Google Drive, to compare them.

via Cloud storage: a pricing and feature guide for consumers | Ars Technica.

I don’t normally post images here, but this chart makes for a quick reference. The linked article has many more details and is worth a read.

What intrigued me about this is the max file size. This is probably set to keep people from building their own file containers (tar, zip, etc.), which is what I hoped to do. Dropbox allows for a max file size of 300MB, iCloud 25MB. Building your own file containers gives you more local control over the security of those files by allowing you to use your own encryption. 300MB seems suitable for even rather large databases. Apps will have to be more frugal with a 25MB limit. I need to start using my Dropbox account. Will report more on this later.
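
To make the container idea concrete, here is a rough Python sketch that packs a few files into a single tar.gz container and checks it against the two limits quoted above. The function names are my own, and treating 1 MB as 1024 * 1024 bytes is an assumption, since the chart doesn't say which definition each service uses. Encryption is left out on purpose: it would be applied to the container bytes with whatever tool you trust.

```python
import io
import tarfile

# Per-file limits quoted in the post.
# Assumption: 1 MB is treated as 1024 * 1024 bytes.
MB = 1024 * 1024
MAX_FILE_SIZE = {"Dropbox": 300 * MB, "iCloud": 25 * MB}


def build_container(files):
    """Pack a {name: bytes} mapping into an in-memory gzip-compressed tar archive."""
    buffer = io.BytesIO()
    with tarfile.open(fileobj=buffer, mode="w:gz") as archive:
        for name, data in files.items():
            info = tarfile.TarInfo(name=name)
            info.size = len(data)
            archive.addfile(info, io.BytesIO(data))
    return buffer.getvalue()


def services_that_accept(container):
    """Return the services whose per-file limit the container fits under."""
    return sorted(
        name for name, limit in MAX_FILE_SIZE.items() if len(container) <= limit
    )


if __name__ == "__main__":
    container = build_container({"notes.txt": b"hello", "data.db": b"\x00" * 4096})
    print(len(container), "bytes; fits:", services_that_accept(container))
```

If you do encrypt the container, run the size check on the encrypted output, since that is the file the service actually sees.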